The Arbitrators are contacting Indexer address 0x9082f497bdc512d08ffde50d6fce28e72c2addcf and fisherman 0x4208ce4ad17f0b52e2cadf466e9cf8286a8696d5 for a new dispute filed in the protocol.

Dispute ID: 0x46b08fee8095ff927a2041b6dfbd97c6f405497a1e320eec558d1a19bef9c03f

Allocation: 0x716f07b2252e186e643a6096f858c524bd92712b

Subgraph: QmdKXcBUHR3UyURqVRQHu1oV6VUkBrhi2vNvMx3bNDnUCc

@InspectorPOI, could you share the insights or data you gathered that led to you filing the dispute? Please provide all relevant information and records about the open dispute. This will likely include POIs generated for the affected subgraph(s).

About the Procedure

The Arbitration Charter regulates the arbitration process. You can find it in Radicle project ID rad:git:hnrkrhnth6afcc6mnmtokbp4h9575fgrhzbay or at GIP-0009: Arbitration Charter.

Please use this forum for all communications.

Arbitration Team.

Dear Arbitration Team,

I am submitting a dispute against holographic-indexer (0x9082f497bdc512d08ffde50d6fce28e72c2addcf) for closing an allocation on the Graph Network Arbitrum subgraph without being fully synced.

According to The Graph Explorer, the indexer’s status shows that it is not synced. I have attached recent screenshots to support this claim.

In addition, this indexer previously closed two other allocations under similar circumstances:

| Allocation ID |
| --- |
| 0x008eafdd9b95bc552d2e09fd68ad714e3b4a5bfc |
| 0x1f54bf6bfda5e0ac6ba999fa623ead2ffa17a773 |

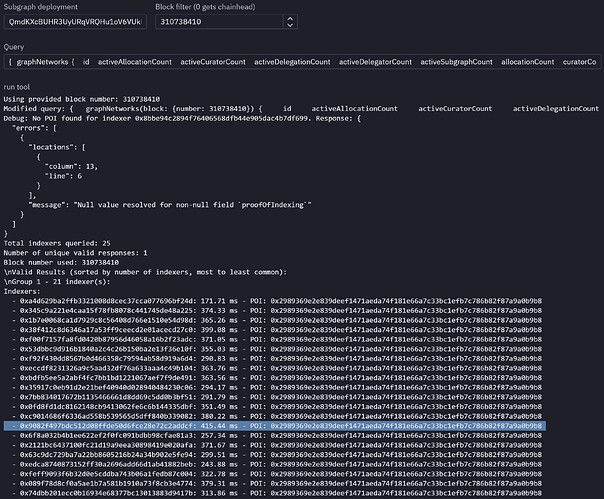

The earlier of these, 0x1f54bf6bfda5e0ac6ba999fa623ead2ffa17a773, was closed at start block #310738410 on the Arbitrum One network. Cross-referencing with the Query Cross-Checking Tool shows that the indexer was not fully synced up to this block at the time of closure. This pattern suggests that the indexer also closed its subsequent allocations, up to the most recent one, without being synced.

Dear Arbitration Team,

The Graph Network Arbitrum subgraph was in fact synced when our indexer closed the allocations, and the POIs were submitted by the indexer agent without any manual intervention. The only reason you don’t see it synced at the time dispute #33 was opened, on April 18th, 2025, is that we had to resync all of our subgraphs after a critical database failure on April 12th, 2025. Most subgraphs are back in a synced state, while others are still syncing.

This is the crash log from our database, which failed to replay the WAL log during restart attempts. Although we successfully reset the WAL log, the database remained inconsistent due to table failures during indexing. Following this incident, we made the decision to resync all subgraphs from scratch.

```
2025-04-12 17:08:58.654 UTC [1] LOG: listening on IPv6 address "::", port 5432
2025-04-12 17:08:58.655 UTC [1] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.5432"
2025-04-12 17:08:58.685 UTC [30] LOG: database system was interrupted while in recovery at 2025-04-12 17:08:21 UTC
2025-04-12 17:08:58.685 UTC [30] HINT: This probably means that some data is corrupted and you will have to use the last backup for recovery.
2025-04-12 17:09:28.325 UTC [1101] FATAL: the database system is not yet accepting connections
2025-04-12 17:09:28.325 UTC [1101] DETAIL: Consistent recovery state has not been yet reached.
2025-04-12 17:09:28.325 UTC [1102] FATAL: the database system is not yet accepting connections
2025-04-12 17:09:28.325 UTC [1102] DETAIL: Consistent recovery state has not been yet reached.
2025-04-12 17:09:29.517 UTC [1] LOG: startup process (PID 30) was terminated by signal 11: Segmentation fault
```

For clarity, we attach a timeline of the events regarding this subgraph:

- February 13th, 2025: Opened allocation 0x1f54bf6bfda5e0ac6ba999fa623ead2ffa17a773
- February 28th, 2025: Closed (synced) allocation 0x1f54bf6bfda5e0ac6ba999fa623ead2ffa17a773
- February 28th, 2025: Opened allocation 0x008eafdd9b95bc552d2e09fd68ad714e3b4a5bfc
- March 3rd, 2025: Closed (synced) allocation 0x008eafdd9b95bc552d2e09fd68ad714e3b4a5bfc
- April 7th, 2025: Opened allocation 0x716f07b2252e186e643a6096f858c524bd92712b
- April 12th, 2025: Closed (synced) allocation 0x716f07b2252e186e643a6096f858c524bd92712b
- April 12th, 2025: Opened allocation 0xb26e1dca48e57f4594bc2896ed14698014b4b1dd
- April 12th, 2025: Critical database failure; we started resyncing all subgraphs from scratch.
- April 18th, 2025: Dispute filed
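The timeline above can be encoded as data and checked for internal consistency. This is a minimal sketch: the shortened allocation IDs and the `check_timeline` helper are hypothetical, and the rule that each allocation opens no earlier than the previous one closes describes the sequence as stated here, not a protocol requirement. Day-level dates also cannot show whether the April 12th closure came before the crash on the same day; that needs timestamps from logs.

```python
from datetime import date

# Allocation events from the timeline (IDs shortened for readability).
# Each entry: (allocation ID prefix, opened, closed or None if still open).
ALLOCATIONS = [
    ("0x1f54bf6b", date(2025, 2, 13), date(2025, 2, 28)),
    ("0x008eafdd", date(2025, 2, 28), date(2025, 3, 3)),
    ("0x716f07b2", date(2025, 4, 7), date(2025, 4, 12)),
    ("0xb26e1dca", date(2025, 4, 12), None),
]
CRASH = date(2025, 4, 12)


def check_timeline(allocations, crash):
    """Return a list of inconsistencies; an empty list means none were found."""
    problems = []
    for alloc_id, opened, closed in allocations:
        if closed is not None and closed < opened:
            problems.append(f"{alloc_id}: closed before it was opened")
        if closed is not None and closed > crash:
            problems.append(f"{alloc_id}: closed after the crash")
    # Each allocation should open on or after the previous one closed.
    for (prev_id, _, prev_closed), (cur_id, cur_opened, _) in zip(allocations, allocations[1:]):
        if prev_closed is None or cur_opened < prev_closed:
            problems.append(f"{cur_id}: opened while {prev_id} was still open")
    return problems


print(check_timeline(ALLOCATIONS, CRASH))  # []
```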

We did not close any allocation with an arbitrary POI or in an unsynced state, nor have we closed 0xb26e1dca48e57f4594bc2896ed14698014b4b1dd, as the subgraph is still syncing.

Please advise what additional proof you need. We retained the database directory from before the resync. An effective validation of the POIs requires comparing them against other indexers’ submissions rather than checking our current sync state.

Best.

Dear @megatron,

Thank you for your response and the details provided.

The POI comparison tool indicates that a majority of indexers have reached consensus on the POI at block #310738410; the disputed indexer is the exception, with no POI currently found. Additionally, The Graph Explorer shows that synchronization is still in progress.
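The comparison described above amounts to a majority vote over the POIs that different indexers report for the same deployment and block. This is a minimal illustration, not the actual POI comparison tool: the indexer labels and POI values are hypothetical, and the strict-majority rule (counting indexers that returned no POI against consensus) is an assumption.

```python
from collections import Counter
from typing import Optional


def poi_consensus(pois: dict[str, Optional[str]]) -> Optional[str]:
    """Return the POI reported by a strict majority of indexers, or None.

    `pois` maps an indexer label to the POI it reported at one block;
    None means the indexer returned no POI (for example, not yet synced).
    """
    reported = [p.lower() for p in pois.values() if p is not None]
    if not reported:
        return None
    poi, votes = Counter(reported).most_common(1)[0]
    # Majority of all indexers queried, so missing POIs count against consensus.
    return poi if votes * 2 > len(pois) else None


def check_indexer(pois: dict[str, Optional[str]], indexer: str) -> str:
    """Classify one indexer's POI relative to the majority value."""
    mine = pois.get(indexer)
    if mine is None:
        return "no POI found"
    consensus = poi_consensus(pois)
    if consensus is None:
        return "no consensus"
    return "matches consensus" if mine.lower() == consensus else "diverges"


# Hypothetical sample: three indexers agree, the fourth has not reached the block.
sample = {"indexer-a": "0xaa", "indexer-b": "0xaa", "indexer-c": "0xaa", "disputed": None}
print(check_indexer(sample, "disputed"))  # no POI found
```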

While I understand that database corruption is an unfortunate and sometimes unavoidable issue, I would like to confirm my understanding of the timeline you’ve provided to ensure accuracy and prevent any misunderstandings.

To elaborate:

- On February 13th, 2025, you began syncing the network subgraph and opened the first allocation 0x1f54bf6bfda5e0ac6ba999fa623ead2ffa17a773.
- By February 28th, the network subgraph was fully synced and allocation 0x1f54bf6bfda5e0ac6ba999fa623ead2ffa17a773 was closed.
- Subsequently, the subgraph remained synced until a critical database failure occurred on April 12th, 2025, after which you initiated a resync of the subgraphs from scratch.

Could you please confirm if this understanding is accurate?

Hi Inspector_POI,

The timeline is correct. The subgraph is not returning the POI through the GraphQL interface because it is still resyncing, a process we started on April 12th, 2025, after the crash. We are currently at block #250945455 for that subgraph, while you are querying #310738410.

However, the POI was available every time we closed an allocation before our database crash on April 12th, 2025, since we had a fully synced version of the subgraph at the time.
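The explanation above comes down to simple block arithmetic. A minimal sketch, assuming (as the reply states) that a POI for a block can only be returned once the deployment has indexed that block; `can_serve_poi` and `blocks_behind` are hypothetical helper names.

```python
def can_serve_poi(latest_synced_block: int, target_block: int) -> bool:
    # Assumption: a POI is only available for blocks the deployment has indexed.
    return latest_synced_block >= target_block


def blocks_behind(latest_synced_block: int, target_block: int) -> int:
    # How many blocks remain before the target block is indexed.
    return max(0, target_block - latest_synced_block)


LATEST_SYNCED = 250_945_455   # resync progress reported in the reply
DISPUTED_BLOCK = 310_738_410  # block queried for the POI comparison

print(can_serve_poi(LATEST_SYNCED, DISPUTED_BLOCK))  # False
print(blocks_behind(LATEST_SYNCED, DISPUTED_BLOCK))  # 59792955
```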

Thank you very much for your response.

Could you please run the following SQL query on both the database directory you retained from before the failure (subgraph db) and your current, still-syncing database? This will help us verify the state of the data before the incident. You may attach the results here.

```sql
SELECT
    c.relname AS table_name,
    pg_size_pretty(pg_total_relation_size(c.oid)) AS total_size,
    pg_size_pretty(pg_relation_size(c.oid)) AS table_size,
    pg_size_pretty(pg_indexes_size(c.oid)) AS index_size,
    COALESCE(s.n_live_tup, 0) AS estimated_rows
FROM
    pg_class c
    JOIN pg_namespace n ON n.oid = c.relnamespace
    LEFT JOIN pg_stat_user_tables s ON s.relid = c.oid
WHERE
    n.nspname = 'sgdxxx' -- Your namespace here for the subgraph
    AND c.relkind = 'r'
ORDER BY
    pg_total_relation_size(c.oid) DESC;
```

Additionally, if you happen to have any indexer-agent logs related to the allocation closure, we would greatly appreciate it if you could share them. These logs are crucial for understanding the sequence of events leading up to the failure.

Hi Inspector_POI,

Below you can find the indexer agent logs related to the closed allocation and the table data from the live database.

We are still working on moving the backed-up crashed database to a new server so we can run a Postgres instance on it and get you the results of the other query you requested.

```json
{"level":30,"time":1744455814346,"pid":1,"hostname":"722a8eb22524","name":"IndexerAgent","component":"AllocationManager","protocolNetwork":"eip155:42161","action":404,"deployment":{"bytes32":"0xde95b1515f9c2d330d15bd2b651dc4613bda455ed3a59d9b95a110fda34fd5d5","ipfsHash":"QmdKXcBUHR3UyURqVRQHu1oV6VUkBrhi2vNvMx3bNDnUCc"},"allocation":"0x716f07b2252e186E643a6096f858c524Bd92712B","indexer":"0x9082F497Bdc512d08FFDE50d6FCe28e72c2AdDcf","amountGRT":"2000.0","poi":"0x574a3d0f0ea89bc0bbbb3809663c04899de7c5e59149c414586876d5bd8623ee","transaction":"0x4550a4282f353045081246a19cf6ca39c86dd0160210e9e387c2a5d851c277c1","indexingRewards":{"type":"BigNumber","hex":"0x319a7dcadf1a81c0"},"msg":"Successfully closed allocation"}
```
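The agent log entry can be checked mechanically. This is a minimal sketch using only Python’s standard library: it parses a trimmed copy of the entry (only the fields used are kept), confirms the logged allocation is the disputed one despite the checksummed (mixed-case) address, converts `time` from milliseconds since the Unix epoch to UTC, and converts the hex `indexingRewards` value to GRT, assuming GRT’s 18 decimals. `summarize` and the constant names are hypothetical.

```python
import json
from datetime import datetime, timezone

# The "Successfully closed allocation" entry, trimmed to the fields used below.
LOG_LINE = (
    '{"level":30,"time":1744455814346,"name":"IndexerAgent",'
    '"allocation":"0x716f07b2252e186E643a6096f858c524Bd92712B",'
    '"poi":"0x574a3d0f0ea89bc0bbbb3809663c04899de7c5e59149c414586876d5bd8623ee",'
    '"indexingRewards":{"type":"BigNumber","hex":"0x319a7dcadf1a81c0"},'
    '"msg":"Successfully closed allocation"}'
)
DISPUTED_ALLOCATION = "0x716f07b2252e186e643a6096f858c524bd92712b"
GRT_DECIMALS = 18  # GRT is an 18-decimal ERC-20 token


def summarize(line: str) -> dict:
    entry = json.loads(line)
    return {
        # Addresses are logged checksummed, so compare case-insensitively.
        "is_disputed": entry["allocation"].lower() == DISPUTED_ALLOCATION,
        # "time" is milliseconds since the Unix epoch.
        "closed_at": datetime.fromtimestamp(entry["time"] / 1000, tz=timezone.utc),
        "poi": entry["poi"],
        # Rewards are a hex-encoded integer in the token's smallest unit.
        "rewards_grt": int(entry["indexingRewards"]["hex"], 16) / 10**GRT_DECIMALS,
    }


summary = summarize(LOG_LINE)
print(summary["is_disputed"], summary["closed_at"].isoformat(), summary["rewards_grt"])
```

For this entry, the closing time works out to 2025-04-12 11:03:34 UTC, earlier than the 17:08 UTC entries in the crash log, and the indexing rewards to roughly 3.57 GRT.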

| table_name | total_size | table_size | index_size | estimated_rows |
| --- | --- | --- | --- | --- |
| graph_network | 4239 MB | 1677 MB | 2561 MB | 1501266 |
| graph_account | 4236 MB | 832 MB | 3404 MB | 4423147 |
| indexer | 1250 MB | 318 MB | 932 MB | 553001 |
| poi2$ | 969 MB | 306 MB | 663 MB | 2937869 |
| allocation | 880 MB | 209 MB | 671 MB | 406485 |
| delegated_stake | 786 MB | 173 MB | 613 MB | 573202 |
| subgraph_deployment | 538 MB | 142 MB | 395 MB | 359574 |
| delegator | 358 MB | 110 MB | 248 MB | 484157 |
| retryable_ticket_redeem_attempt | 191 MB | 56 MB | 135 MB | 265895 |
| subgraph | 186 MB | 49 MB | 137 MB | 68386 |
| retryable_ticket | 137 MB | 40 MB | 97 MB | 265895 |
| epoch | 34 MB | 9312 kB | 25 MB | 47523 |
| subgraph_deployment_manifest | 34 MB | 14 MB | 7072 kB | 12109 |
| subgraph_deployment_schema | 33 MB | 10040 kB | 4080 kB | 12105 |
| curator | 24 MB | 6464 kB | 18 MB | 17391 |
| name_signal | 22 MB | 4760 kB | 17 MB | 12454 |
| data_sources$ | 21 MB | 13 MB | 7480 kB | 47619 |
| signal | 20 MB | 4888 kB | 15 MB | 13385 |
| subgraph_version | 17 MB | 5640 kB | 12 MB | 12664 |
| signal_transaction | 13 MB | 3584 kB | 10000 kB | 13473 |
| current_subgraph_deployment_relation | 12 MB | 3616 kB | 8840 kB | 15559 |
| subgraph_meta | 12 MB | 5504 kB | 6640 kB | 10490 |
| indexer_query_fee_payment_aggregation | 11 MB | 2928 kB | 8432 kB | 10972 |
| bridge_deposit_transaction | 11 MB | 3480 kB | 7832 kB | 9552 |
| name_signal_transaction | 9024 kB | 2392 kB | 6592 kB | 9474 |
| payment_source | 8480 kB | 2224 kB | 6216 kB | 10987 |
| subgraph_version_meta | 7792 kB | 3144 kB | 4608 kB | 12664 |
| name_signal_subgraph_relation | 6008 kB | 1808 kB | 4160 kB | 7889 |
| bridge_withdrawal_transaction | 1248 kB | 288 kB | 920 kB | 1006 |
| pool | 1024 kB | 208 kB | 776 kB | 2314 |
| graph_account_meta | 432 kB | 104 kB | 288 kB | 246 |
| dispute | 328 kB | 8192 bytes | 280 kB | 6 |
| token_lock_wallet | 224 kB | 0 bytes | 216 kB | 0 |
| attestation | 152 kB | 0 bytes | 144 kB | 0 |
| authorized_function | 112 kB | 0 bytes | 104 kB | 0 |
| token_manager | 112 kB | 0 bytes | 104 kB | 0 |
| graph_account_name | 112 kB | 0 bytes | 104 kB | 0 |

Thanks for your cooperation. Looking forward to the results from the crashed database.

Hey Inspector_POI,

The sync of the subgraph (QmdKXcBUHR3UyURqVRQHu1oV6VUkBrhi2vNvMx3bNDnUCc) has just caught up with the block in dispute for allocation 0x716f07b2252e186e643a6096f858c524bd92712b.

Can you please run your tooling again to check the POI’s validity? I just checked with a local script, and it matches the one we presented for that allocation.

If required, we can also pg_dump the current table, which includes all the entities and POIs that led to that result. Let me know.

Thanks

For the network subgraph, I’ve checked your status, and the public POI checks out.

I also checked a more recent block, and you appear to be well synced.

Dear Arbitration Team,

I don’t believe I have anything further to add beyond the results of the last requested SQL query on the crashed database. Please advise whether these are still required or whether the materials the disputed indexer has provided so far are sufficient.

Once again, thank you for your efforts, @megatron.

Inspector_POI, this is the result of the requested query on the crashed database.

| table_name | total_size | table_size | index_size | estimated_rows |
| --- | --- | --- | --- | --- |
| graph_account | 5259 MB | 975 MB | 4283 MB | 0 |
| graph_network | 4926 MB | 1988 MB | 2938 MB | 0 |
| pg_temp_20050464 | 1980 MB | 1980 MB | 0 bytes | 0 |
| allocation | 1422 MB | 403 MB | 1019 MB | 0 |
| indexer | 1244 MB | 361 MB | 883 MB | 0 |
| poi2$ | 1095 MB | 352 MB | 742 MB | 0 |
| subgraph_deployment | 849 MB | 265 MB | 583 MB | 0 |
| delegated_stake | 696 MB | 176 MB | 519 MB | 0 |
| delegator | 370 MB | 113 MB | 257 MB | 0 |
| subgraph | 314 MB | 96 MB | 218 MB | 0 |
| retryable_ticket_redeem_attempt | 212 MB | 63 MB | 149 MB | 0 |
| retryable_ticket | 146 MB | 45 MB | 101 MB | 0 |
| epoch | 65 MB | 21 MB | 45 MB | 0 |
| indexer_query_fee_payment_aggregation | 49 MB | 14 MB | 35 MB | 0 |
| subgraph_deployment_manifest | 44 MB | 18 MB | 7040 kB | 0 |
| subgraph_deployment_schema | 43 MB | 13 MB | 4176 kB | 0 |
| payment_source | 36 MB | 10 MB | 25 MB | 0 |
| curator | 31 MB | 9488 kB | 22 MB | 0 |
| name_signal | 25 MB | 6224 kB | 18 MB | 0 |
| signal | 24 MB | 6808 kB | 18 MB | 0 |
| subgraph_version | 24 MB | 7608 kB | 17 MB | 0 |
| data_sources$ | 24 MB | 17 MB | 6624 kB | 0 |
| signal_transaction | 18 MB | 4968 kB | 14 MB | 0 |
| current_subgraph_deployment_relation | 18 MB | 5248 kB | 13 MB | 0 |
| subgraph_meta | 13 MB | 6592 kB | 6632 kB | 0 |
| bridge_deposit_transaction | 11 MB | 3816 kB | 7872 kB | 0 |
| name_signal_transaction | 11 MB | 2968 kB | 8128 kB | 0 |
| subgraph_version_meta | 9088 kB | 4184 kB | 4864 kB | 0 |
| name_signal_subgraph_relation | 7128 kB | 2096 kB | 4992 kB | 0 |
| bridge_withdrawal_transaction | 1528 kB | 376 kB | 1112 kB | 0 |
| pool | 960 kB | 208 kB | 712 kB | 0 |
| graph_account_meta | 400 kB | 112 kB | 248 kB | 0 |
| dispute | 336 kB | 16 kB | 280 kB | 0 |
| token_lock_wallet | 224 kB | 0 bytes | 216 kB | 0 |
| attestation | 152 kB | 0 bytes | 144 kB | 0 |
| token_manager | 112 kB | 0 bytes | 104 kB | 0 |
| authorized_function | 112 kB | 0 bytes | 104 kB | 0 |
| graph_account_name | 112 kB | 0 bytes | 104 kB | 0 |
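Because every `estimated_rows` value from the crashed database is 0, the live and crashed result sets are easier to compare by size. A zero there most likely reflects PostgreSQL resetting its cumulative statistics (the source of `n_live_tup`) when a server starts with crash recovery, so it does not by itself mean the tables are empty. The sketch below assumes the `pg_size_pretty()` output format (an integer plus a 1024-based unit label); `to_bytes` and `compare_sizes` are hypothetical helpers, and since `pg_size_pretty()` rounds, the byte counts are approximate.

```python
import re

# pg_size_pretty() reports sizes in 1024-based units with these labels.
UNITS = {"bytes": 1, "kB": 1024, "MB": 1024**2, "GB": 1024**3, "TB": 1024**4}


def to_bytes(pretty: str) -> int:
    """Convert a pg_size_pretty() string such as '9312 kB' back to (approximate) bytes."""
    match = re.fullmatch(r"\s*(\d+)\s*(bytes|kB|MB|GB|TB)\s*", pretty)
    if match is None:
        raise ValueError(f"unrecognized size: {pretty!r}")
    return int(match.group(1)) * UNITS[match.group(2)]


def compare_sizes(live: dict[str, str], crashed: dict[str, str]) -> list[tuple[str, int]]:
    """Return (table, crashed minus live) size differences in bytes, largest first.

    A table missing from one result set is treated as 0 bytes there.
    """
    tables = set(live) | set(crashed)
    diffs = [
        (t, to_bytes(crashed.get(t, "0 bytes")) - to_bytes(live.get(t, "0 bytes")))
        for t in tables
    ]
    return sorted(diffs, key=lambda d: abs(d[1]), reverse=True)


# A few total_size values copied from the two result sets.
live = {"graph_network": "4239 MB", "poi2$": "969 MB", "epoch": "34 MB"}
crashed = {"graph_network": "4926 MB", "poi2$": "1095 MB", "epoch": "65 MB",
           "pg_temp_20050464": "1980 MB"}

for table, delta in compare_sizes(live, crashed):
    print(f"{table:20} {delta / 1024**2:+.0f} MB")
```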

All,

After reviewing the evidence, including logs from @megatron and observations and data from @Inspector_POI, the Arbitration Council has decided to resolve the dispute as a draw.

We appreciate the detailed evidence, logs, and observations provided, as they were invaluable in helping the Arbitration Council reach an informed decision. We also recognize the time and effort you both dedicated to submitting clear and thorough information, which ultimately strengthens the integrity and transparency of the dispute resolution process.

The Arbitrators
